LONDON: For a platform with at least 2.91 billion “friends,” Facebook has been creating a lot of enemies of late, even among its own ranks.

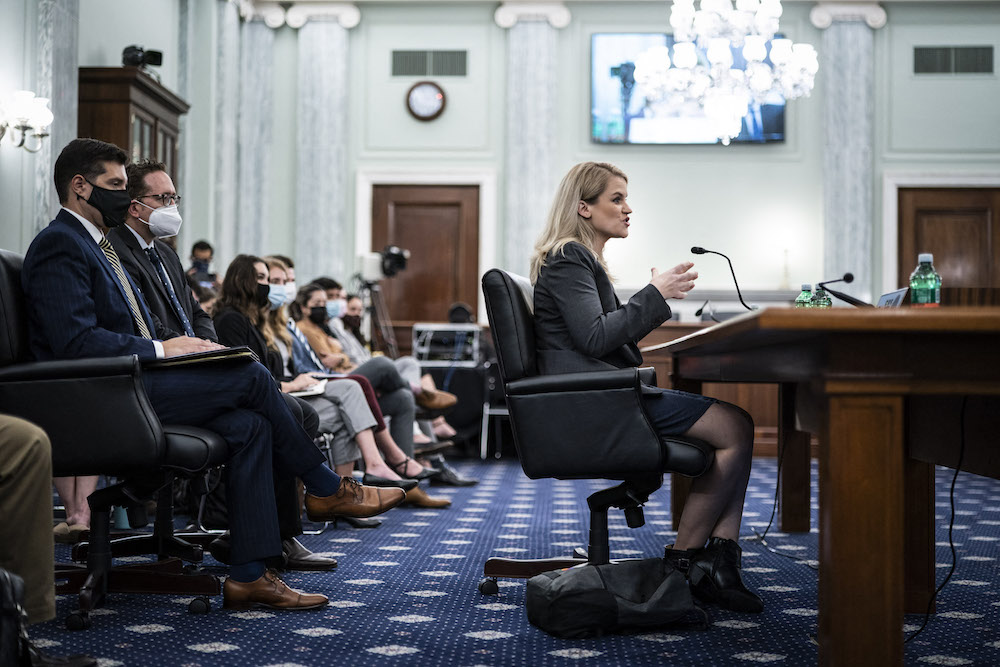

Just this week, former Facebook employee and whistleblower Frances Haugen testified before members of the US Senate, delivering a scathing overview of how the world’s largest social networking site prioritizes profits over public safety.

This is in spite of its own extensive internal research, leaked to US media, which demonstrates the harm that Facebook and its products are causing worldwide to communities, to democratic institutions and to children with fragile body image.

Yet precious little has been done in the Arab world, for instance, to hold Facebook and other social networking platforms to account for the extremist ideas, bigoted views and hate speech that continue to reach millions of users across the region despite the platforms’ supposed policing of content.

“With even just a quick search in Arabic, I found 38 groups or pages currently on Facebook with over 100 followers or likes that feature unmistakable references in their titles to the Protocols of the Elders of Zion, the most infamous example of anti-Jewish disinformation and hate speech in history,” David Weinberg, Washington director for international affairs at the Anti-Defamation League, told Arab News.

“One would think that if Facebook were even casually interested in proactively searching for horrific hate speech that blatantly violates its terms of service and could lead to deadly violence, that these sorts of pages would have been an easy place for them to start.”

Indeed, although Facebook removed millions of posts featuring hate speech from its platforms in 2020, it still has a lot of ground to cover, especially in languages other than English.

“Facebook has not fixed the real problem. Instead, it has created PR stunts. What Haugen said exposed all their wrongdoing,” Mohamad Najem, the Beirut-based executive director of SMEX, a digital rights organization focusing on freedom of expression, online privacy and safety, told Arab News.

“Unfortunately, all these threats are increasing and tech companies are doing the minimum about it.”

Former Facebook employee and whistleblower Frances Haugen testifies during a Senate Committee on Commerce, Science, and Transportation hearing entitled 'Protecting Kids Online: Testimony from a Facebook Whistleblower' on Capitol Hill, October 05, 2021 in Washington, DC. (AFP)

Responding to the allegation on Friday, a Facebook spokesperson told Arab News: “We do not tolerate hate speech on our platforms. Which is why we continue to invest heavily in people, systems and technology to find and remove this content as quickly as possible.

“We now have 40,000 people working on safety and security at Facebook and have invested $13 billion into it since 2016. Our technology proactively identifies hate speech in over 40 languages globally, including Arabic.

“Whilst we recognize there is more work to do, we are continuing to make significant improvements to tackle the spread of harmful content.

“As our most recent Community Standards Enforcement Report showed, we’re finding and removing more hate speech on our platforms than ever before: the prevalence of hate speech — the amount of that content people actually see — on Facebook is now 0.05 percent of content viewed and is down by almost 50 percent in the last three quarters.”

Although Facebook has come under particular scrutiny of late, it is not the sole offender. The perceived laxity of moderation on microblogging site Twitter has also caused alarm.

Although Twitter recently updated its policy on hate speech, which states that users must “not promote violence against or directly attack or threaten other people on the basis of race, ethnicity, national origin,” accounts doing just that are still active on the platform.

Major social media services including Facebook, Instagram and WhatsApp were hit by a massive outage on October 4, 2021, tracking sites showed, impacting potentially tens of millions of users. (AFP)

“For example, Iran’s supreme leader is permitted to exploit Twitter using a broad array of accounts, including separate dedicated Twitter accounts, for his propaganda, not just in Persian, Arabic and English but also in Urdu, Spanish, French, German, Italian, Russian and Hindi,” Weinberg said.

“Twitter also permits the accounts of major media organs of Iranian-backed violent extremist groups such as Hamas, Hezbollah and Palestinian Islamic Jihad. Even Facebook hasn’t generally been that lax.”

Indeed, accounts in the Arab world, such as those of exiled Egyptian cleric Yusuf Al-Qaradawi and designated terrorist Qais Al-Khazali — both of whom have been featured in Arab News’ “Preachers of Hate” series — remain active and prominent, with the former accumulating 3.2 million followers.

In one of his hate-filled posts, Al-Qaradawi wrote: “Throughout history, God has imposed upon them (the Jews) people who would punish them for their corruption. The last punishment was that of Hitler. This was a divine punishment for them. Next time, God willing, it will be done at the hands of the faithful believers.”

The failure to consistently detect hate speech in languages other than English appears to be a common problem across social networking sites.

As Haugen pointed out in her Senate evidence, Facebook has “documentation that shows how much operational investment there was by different languages, and it showed a consistent pattern of underinvestment in languages that are not English.”

Haugen left Facebook in May and provided internal company documents about Facebook to journalists and others, alleging that Facebook consistently chooses profit over safety. (Getty via AFP)

As a result, extremist groups have been at liberty to exploit this lax approach to content moderation in other languages.

The consensus among experts is that, in the pursuit of profits, social media platforms may have increased social division, inspired hate attacks and created a global trust deficit that has led to an unprecedented blurring of the line between fact and fiction.

“I saw Facebook repeatedly encounter conflicts between its own profits and our safety,” Haugen told senators during her testimony on Tuesday.

“Facebook consistently resolved these conflicts in favor of its own profits. The result has been more division, more harm, more lies, more threats and more combat. In some cases, this dangerous online talk has led to actual violence that harms and even kills people.

“As long as Facebook is operating in the shadows, hiding its research from public scrutiny, it is unaccountable. Until the incentives change, Facebook will not change. Left alone, Facebook will continue to make choices that go against the common good. Our common good.”

The influence of social media companies on public attitudes and trust cannot be overstated. For instance, in 2020, a massive 79 percent of Arab youth obtained their news from social media, compared with just 25 percent in 2015, according to the Arab Youth Survey.

Supporters of US President Donald Trump, including Jake Angeli, a QAnon supporter known for his painted face and horned hat, protest in the US Capitol on January 6, 2021. (AFP/File Photo)

Facebook and other popular Facebook-owned products, such as Instagram and WhatsApp, which experienced an almost six-hour global outage on Monday, have been repeatedly linked to outbreaks of violence, from the incitement of racial hatred in Myanmar against Rohingya Muslims to the storming of the Capitol in Washington by supporters of outgoing President Donald Trump in January this year.

The company’s own research shows it is “easier to inspire people to anger than to other emotions,” Haugen said in a recent CBS News interview for “60 Minutes.”

She added: “Facebook has realized that if they change the algorithm to be safer, people will spend less time on the site, they’ll click on fewer ads, they’ll make less money.”

Many have applauded Haugen’s courage in coming forward and leaking thousands of internal documents that expose the firm’s inner workings. Facebook CEO Mark Zuckerberg has said her claims are “just not true.”

In recent months, the social networking site has been fighting legal battles on multiple fronts. In Australia, the government has taken Facebook to court to settle its status as a publisher, which would make it liable for defamation in relation to content posted by third parties.

Russia, meanwhile, is seeking to impose a heavy fine on the social media giant, worth 5-10 percent of its annual turnover, in response to a slew of alleged legal violations.

Although Facebook removed millions of posts featuring hate speech from its platforms in 2020, it still has a lot of ground to cover. (AFP/File Photo)

Earlier this year, the G7 group of nations, consisting of Canada, France, Germany, Italy, Japan, the UK and the US, signed a tax agreement stipulating that Facebook and other tech giants, including Amazon, must adhere to a global minimum corporate tax of at least 15 percent.

In Facebook’s defense, it must be said that its moderators face a grueling task, navigating the rules and regulations of various governments, combined with the growing sophistication of online extremists.

According to Jacob Berntsson, head of policy and research for Tech Against Terrorism, an initiative launched to fight online extremism while also protecting freedom of speech, terrorist organizations have become more adept at using social networking platforms without falling foul of moderators.

“I think to be very clear, Facebook can certainly improve their response in this area, but it is very difficult when, for example, the legal status of the group isn’t particularly clear,” Berntsson told Arab News.

“I think it all goes to show that this is massively difficult, and content moderation on this scale is virtually impossible. So, there are always going to be mistakes. There are always going to be gaps.”

------------------

Twitter: @Tarek_AliAhmad